In the ever-evolving realm of technology, few domains have made as profound an impact as machine learning (ML) and deep learning (DL). These cutting-edge fields, nestled within the broader landscape of artificial intelligence, have witnessed a meteoric rise in popularity in recent years.

As organizations across the globe harness the power of these technologies to fuel innovation and enhance decision-making, it’s imperative to understand the crucial role that Graphics Processing Units (GPUs) play in making this transformative leap possible.

Brief Overview of Machine Learning and its Increasing Popularity

Machine learning, a subset of artificial intelligence, revolves around the development of algorithms that enable computers to learn from and make predictions or decisions based on data. Unlike traditional software that relies on explicit instructions, ML systems can adapt and improve their performance through experience, thanks to their ability to recognize patterns and correlations in large datasets.

Over the past decade, machine learning has undergone an unprecedented surge in popularity. As organizations strive to gain a competitive edge, machine learning has emerged as a vital tool in diverse domains, from healthcare and finance to marketing and autonomous vehicles.

This surge in popularity is driven by the promise of automating tasks, uncovering hidden insights, and facilitating data-driven decision-making.

Use of GPUs in Machine Learning

At the heart of the machine learning revolution lies the indispensable role of Graphics Processing Units (GPUs). Originally designed for graphics and gaming, GPUs have evolved into essential components for ML and DL.

Their parallel processing capabilities and capacity to handle extensive data make them invaluable for ML tasks.

ML models, and deep neural networks in particular, require processing vast amounts of data through intricate mathematical operations.

GPUs are well suited to these parallelizable workloads. CPUs, designed for general-purpose computing, are comparatively slow at the repetitive matrix multiplications and intricate computations at the core of ML algorithms.
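To see why matrix multiplication suits a GPU, note that each element of the output C = AB depends only on one row of A and one column of B, so every element can be computed independently. The minimal sketch below makes that independence explicit by handing each (i, j) element to a thread pool; `matmul_parallel` and its `workers` parameter are hypothetical names, and this is an illustration of the decomposition, not how GPU libraries are implemented. Python threads will not actually speed up this arithmetic because of the global interpreter lock; a GPU applies the same decomposition across thousands of hardware cores.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of one row of A and one column of B."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(A, B, workers=4):
    """Compute C = A @ B, treating every output element as an independent task."""
    cols = list(zip(*B))  # transpose B so its columns are easy to index
    n_rows, n_cols = len(A), len(cols)
    # Each (i, j) pair reads only row i of A and column j of B,
    # so no task depends on the result of any other task.
    tasks = [(i, j) for i in range(n_rows) for j in range(n_cols)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as `tasks`
        values = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), tasks))
    # Reshape the flat list of results back into an n_rows x n_cols matrix
    return [values[i * n_cols:(i + 1) * n_cols] for i in range(n_rows)]


print(matmul_parallel([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```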

What is a Graphics Processing Unit?

Definition and Explanation of GPUs (Graphics Processing Units)

Graphics Processing Units (GPUs), originally tailored for graphics-intensive applications, have evolved into versatile, high-performance devices.

These processors, known for their parallel processing architecture, now play a pivotal role in complex computational tasks, notably in machine learning and deep learning.

Their ability to execute many operations simultaneously, originally developed for rendering graphics, has made them essential tools in the field of artificial intelligence.

Comparison of GPUs with CPUs (Central Processing Unit)

GPUs, with their parallel architecture and numerous cores, excel at parallel processing, offering exceptional efficiency for tasks involving extensive datasets and complex computations.

Central Processing Units (CPUs), by contrast, have fewer but more powerful cores and excel at fast single-threaded execution and general-purpose tasks. In many machine learning applications, however, and especially in training deep neural networks, which is dominated by vast matrix multiplications, GPUs can outperform CPUs by one or more orders of magnitude in speed and efficiency.

Importance of Parallel Processing in Machine Learning

The importance of parallel processing in machine learning cannot be overstated. Many machine learning algorithms, particularly deep learning models, involve complex operations on large matrices and data sets.

These tasks can be broken down into numerous parallel sub-tasks, making them a perfect match for GPU architecture. Parallel processing allows machine learning practitioners to train models more quickly and process larger datasets with ease, ultimately leading to faster iterations and more sophisticated models.
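A common way of breaking training into parallel sub-tasks is data parallelism: a batch is split into shards, each worker computes a partial gradient on its shard, and the partial results are combined. The sketch below assumes a toy linear model y ≈ w·x + b trained with mean squared error; the function names are hypothetical, and threads merely stand in for GPU cores or devices. Because the loss is an average over examples, summing the per-shard gradient sums and dividing by the total number of examples gives the full-batch gradient (up to floating-point rounding).

```python
from concurrent.futures import ThreadPoolExecutor


def shard_grad_sums(w, b, xs, ys):
    """Sum over one shard of the per-example gradients of (w*x + b - y)**2."""
    gw = gb = 0.0
    for x, y in zip(xs, ys):
        err = w * x + b - y
        gw += 2 * err * x  # d/dw of err**2
        gb += 2 * err      # d/db of err**2
    return gw, gb


def data_parallel_grad(w, b, xs, ys, n_shards=4):
    """Mean-squared-error gradient computed shard by shard, then combined."""
    size = -(-len(xs) // n_shards)  # ceiling division so no example is dropped
    shards = [(xs[i:i + size], ys[i:i + size]) for i in range(0, len(xs), size)]
    with ThreadPoolExecutor(max_workers=n_shards) as pool:
        partials = list(pool.map(lambda s: shard_grad_sums(w, b, s[0], s[1]), shards))
    n = len(xs)
    # Summing the partial sums and dividing by n gives the full-batch mean gradient
    return sum(p[0] for p in partials) / n, sum(p[1] for p in partials) / n


xs = [float(i) for i in range(10)]
ys = [3.0 * x + 1.0 for x in xs]
print(data_parallel_grad(0.0, 0.0, xs, ys))  # (-180.0, -29.0)
```

On a GPU or across several GPUs, the combining step corresponds to a reduction over the partial gradients before the weights are updated.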

Without GPUs and their parallel computing capabilities, the rapid advancements and breakthroughs witnessed in machine learning and deep learning in recent years would not have been possible.

Parallelism is the driving force behind the effectiveness of GPUs in machine learning and deep learning, enabling data scientists and researchers to harness the true potential of artificial intelligence.

The Importance of GPUs in Deep Learning Models

GPUs and Their Role in Accelerating Machine Learning Algorithms

In machine learning, GPUs play a pivotal role in accelerating algorithms by leveraging their parallel processing power.

Machine learning models, especially deep neural networks, involve intricate mathematical computations that can be dauntingly time-consuming when executed sequentially. GPUs are equipped with thousands of small, efficient cores that can perform these computations simultaneously, dramatically speeding up the training process.

This parallelism translates into shorter development cycles and more efficient model optimization, allowing researchers and data scientists to explore complex algorithms and ideas with unprecedented ease.

GPU’s Capability to Handle Large Datasets and Complex Computations

Machine learning’s relentless quest for innovation is often stymied by the limitations of handling extensive datasets and complex computations. GPUs shine in this department, offering a remarkable capability to tackle these challenges.

As the volume of data continues to grow exponentially, GPUs provide the computational muscle needed to process, manipulate, and analyze massive datasets with remarkable agility.

Their ability to handle intricate computations, such as matrix multiplications and gradient calculations, is a key factor in training deep learning models effectively.

This enables machine learning practitioners to delve into intricate problems and work with richer training data, unleashing the full potential of their algorithms.

Benefits of Using GPUs: Faster Training, Improved Performance, and Energy Efficiency

Incorporating GPUs into machine learning yields a multitude of benefits. Training times once measured in weeks or months on traditional CPUs can often be reduced to hours or days with GPUs, expediting solution development and deployment.

Beyond speed, GPUs enhance model performance by facilitating experimentation with more complex architectures and larger datasets.

Additionally, GPUs are typically more energy-efficient than CPUs for these workloads, consuming less power per computation, which lowers the energy cost of training. The combination of faster training, improved performance, and energy efficiency solidifies GPUs as indispensable tools in the world of artificial intelligence, enabling innovation, problem-solving, and the exploration of new AI frontiers.

Introduction to Deep Learning

Definition and Explanation of Deep Learning

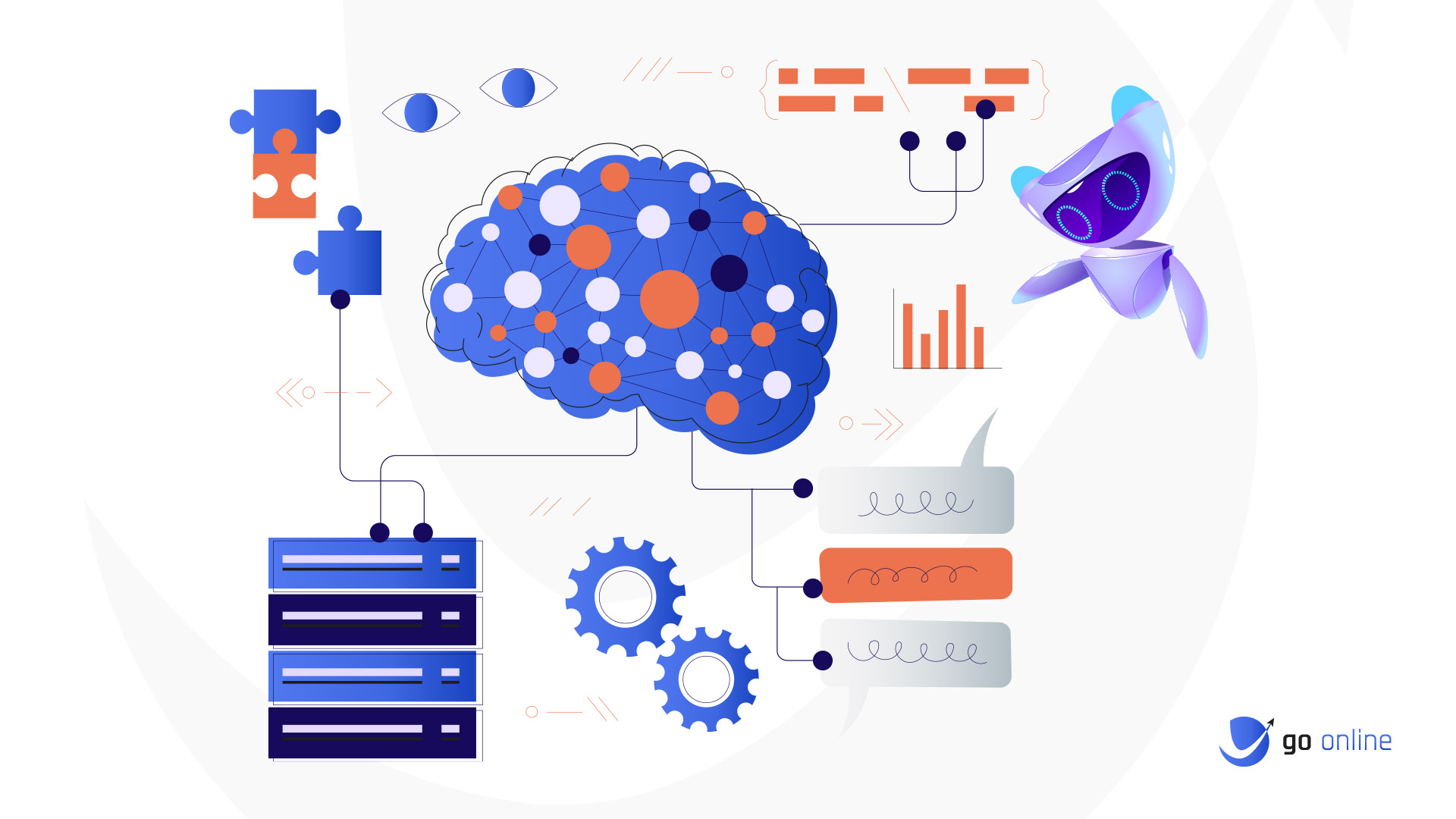

Deep learning, a subfield of machine learning, is a cutting-edge approach to artificial intelligence that seeks to mimic the workings of the human brain. At its core, deep learning involves the utilization of artificial neural networks, which are inspired by the structure and function of biological neurons. These networks consist of multiple layers of interconnected nodes, known as neurons, which process and transform data in a hierarchical manner.

Deep learning models, often referred to as deep neural networks, are distinguished by their depth, with many hidden layers between the input and output layers. This depth enables them to learn intricate patterns and representations from data, making them exceptionally adept at tasks like image and speech recognition, natural language processing, and even playing complex games.
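The layered structure described above can be sketched as a forward pass: each layer multiplies its input by a weight matrix, adds a bias, and applies a nonlinearity, and the next layer consumes that output. This is a minimal illustration assuming fully connected layers with ReLU activations and hand-picked, hypothetical weights; real networks learn their weights and have far more units. Each layer is a matrix-vector product, the same kind of operation GPUs parallelize.

```python
def relu(v):
    """Elementwise rectified linear unit."""
    return [max(0.0, x) for x in v]


def dense(W, b, x):
    """Fully connected layer: W @ x + b, where W is a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]


def forward(layers, x):
    """Pass x through a stack of (W, b) layers, with ReLU after each hidden layer."""
    for depth, (W, b) in enumerate(layers):
        x = dense(W, b, x)
        if depth < len(layers) - 1:  # no activation on the output layer
            x = relu(x)
    return x


# Hypothetical network with two hidden layers: 2 inputs -> 3 -> 2 -> 1 output
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]], [0.0, 0.5]),
    ([[1.0, 2.0]], [-1.0]),
]
print(forward(layers, [1.0, 2.0]))  # [2.0]
```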

Why Does Deep Learning Rely Heavily on GPUs?

Deep learning’s proficiency in solving complex problems comes at a computational cost, and it’s here that Graphics Processing Units (GPUs) play an integral role. The remarkable performance of deep neural networks hinges on their capacity to process vast amounts of data and execute numerous mathematical operations simultaneously.

This level of parallelism aligns perfectly with the architecture of GPUs, which consist of thousands of small, highly efficient cores designed for parallel computing.

By distributing the computational workload of a deep learning model across these cores, GPUs dramatically accelerate training, so models converge in far less wall-clock time and can be optimized more efficiently.

Without GPUs, the training of deep neural networks on conventional Central Processing Units (CPUs) would be prohibitively slow, hindering the advancement of deep learning applications.

Examples of Deep Learning Applications

Deep learning has revolutionized a multitude of fields, driving innovation and introducing new possibilities in various applications.

- In computer vision, deep learning is responsible for breakthroughs in image classification, object detection, and facial recognition.

- Autonomous vehicles rely on deep learning algorithms for tasks such as lane keeping, obstacle detection, and even self-driving capabilities.

- In natural language processing, deep learning underpins the development of chatbots, language translation, and sentiment analysis.

- In healthcare, it aids in disease diagnosis and drug discovery.

- In the entertainment industry, deep learning is transforming various aspects, such as recommendation systems and the creation of deepfake content.

These examples merely scratch the surface of the vast and ever-expanding landscape of deep learning applications, demonstrating its pervasive influence in our technologically driven world.

GPUs for Deep Learning

How GPUs Have Revolutionized Deep Learning

Graphics Processing Units (GPUs) have revolutionized deep learning in profound ways. At the heart of this transformation is their parallel processing prowess. Deep learning models, particularly deep neural networks, are characterized by their depth and complexity.

Training these intricate models involves an enormous number of mathematical operations and the processing of massive datasets. GPUs, with their parallel architecture consisting of thousands of small, efficient cores, excel at handling these tasks simultaneously.

This parallelism results in significant time savings during training and enables data scientists and researchers to experiment with more ambitious models. The ability to swiftly iterate through various architectures and hyperparameters has accelerated the pace of innovation in deep learning, opening the door to solving complex problems and unlocking new horizons.

Analysis of GPU’s Ability to Handle Deep Neural Networks and Complex Models

Deep neural networks, with their numerous layers and parameters, demand a considerable amount of computational power. GPUs are uniquely suited to handle this computational load. Their architecture allows for the efficient execution of matrix multiplications, backpropagation, and optimization algorithms that are fundamental to training deep neural network models.
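Backpropagation is the chain rule applied layer by layer, from the loss back to each weight. The sketch below assumes a deliberately tiny two-weight model, ŷ = w2 · relu(w1 · x), with squared-error loss, so the gradients can be derived by hand and checked against finite differences; all names and the learning rate are illustrative. In a real network the same backward pass becomes a sequence of matrix multiplications, which is the part GPUs accelerate.

```python
def forward_pass(w1, w2, x, y):
    """Return the loss and intermediates for y_hat = w2 * relu(w1 * x)."""
    z = w1 * x
    h = max(0.0, z)
    y_hat = w2 * h
    loss = (y_hat - y) ** 2
    return loss, z, h, y_hat


def backward(w1, w2, x, y):
    """Gradients of the loss with respect to w1 and w2 via the chain rule."""
    loss, z, h, y_hat = forward_pass(w1, w2, x, y)
    dloss_dyhat = 2 * (y_hat - y)
    dw2 = dloss_dyhat * h              # y_hat = w2 * h
    dh = dloss_dyhat * w2              # gradient flowing back into the hidden unit
    dz = dh * (1.0 if z > 0 else 0.0)  # ReLU derivative (taken as 0 at z <= 0)
    dw1 = dz * x                       # z = w1 * x
    return dw1, dw2


def numeric_grad(w1, w2, x, y, eps=1e-6):
    """Central finite differences, used only to check backward()."""
    f = lambda a, b: forward_pass(a, b, x, y)[0]
    return ((f(w1 + eps, w2) - f(w1 - eps, w2)) / (2 * eps),
            (f(w1, w2 + eps) - f(w1, w2 - eps)) / (2 * eps))


# One gradient-descent step with a hypothetical learning rate of 0.01
w1, w2, x, y = 0.5, -0.3, 2.0, 1.0
dw1, dw2 = backward(w1, w2, x, y)
w1, w2 = w1 - 0.01 * dw1, w2 - 0.01 * dw2
```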

This capability makes it possible to train even very complex neural networks in a reasonable timeframe. As a result, GPU-accelerated deep learning has enabled the development of state-of-the-art models in fields like computer vision, natural language processing, and reinforcement learning, and has empowered researchers to tackle problems of unprecedented complexity.

Case Studies Highlighting the Performance Improvement with GPU Utilization

Image Classification Advancements

In the domain of image classification, deep learning models have undergone a remarkable transformation with the integration of GPUs. Convolutional neural networks (CNNs), a cornerstone of image classification, have achieved unprecedented accuracy and efficiency gains.

GPU-accelerated training allows these networks to process vast datasets and intricate image features, significantly reducing training times.

Training runs that once took weeks on a conventional CPU can often be completed in hours. This acceleration has far-reaching implications, from improved object recognition in robotics to enhanced medical image analysis, and has set the stage for breakthroughs in various computer vision applications.
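The core operation of a convolutional neural network is a 2D convolution: a small kernel slides over the image, and each output value is a weighted sum of one local patch. The sketch below assumes a single-channel image, no padding, and stride 1, and, like most deep learning libraries, computes cross-correlation (the kernel is not flipped); the edge-detecting kernel is a hand-picked example. Because every output position reads only its own patch, all positions can be computed in parallel, which is why CNN training maps so well onto GPUs.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation of a single-channel image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    # Each output[r][c] depends only on the kh x kw patch at (r, c),
    # so every output position could be assigned to a separate GPU thread.
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A vertical-edge detector applied to a 4x4 image with a dark-to-bright edge
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The nonzero column in the output marks where the image changes from dark to bright.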

NLP and Real-Time Language Processing

Natural language processing (NLP) applications have also witnessed a significant performance boost through GPU utilization. Complex recurrent neural networks (RNNs), pivotal in language translation and sentiment analysis, benefit immensely from GPU acceleration.

Although an RNN processes a sequence one time step at a time, the matrix multiplications within each step, and the processing of many sequences in a batch, can be parallelized, which is paramount in achieving real-time language understanding. GPU-accelerated NLP models have revolutionized communication tools, empowering chatbots to offer instant responses and facilitating real-time language translation services.
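The sketch below shows where the parallelism in a recurrent network comes from. It assumes a vanilla RNN cell, h_t = tanh(W·h_{t-1} + U·x_t + b), with hand-picked weights and hypothetical function names. The loop over time steps is inherently sequential, because each hidden state needs the previous one, but at each step the updates for all sequences in the batch, and the matrix-vector products inside each update, are independent of one another.

```python
import math


def matvec(M, v):
    """Matrix-vector product with M given as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_step(W, U, b, h, x):
    """One vanilla RNN update: tanh(W @ h + U @ x + b)."""
    return [math.tanh(a + c + d) for a, c, d in zip(matvec(W, h), matvec(U, x), b)]


def run_rnn(W, U, b, batch):
    """Run the RNN over a batch of equal-length sequences; return final hidden states."""
    hidden_size = len(b)
    states = [[0.0] * hidden_size for _ in batch]
    seq_len = len(batch[0])
    for t in range(seq_len):  # sequential: step t needs the states from step t - 1
        # Parallelizable: each sequence in the batch is updated independently at step t
        states = [rnn_step(W, U, b, h, seq[t]) for h, seq in zip(states, batch)]
    return states


# Hypothetical 2-unit hidden state, 1-dimensional inputs, batch of 2 sequences
W = [[0.5, 0.0], [0.0, 0.5]]
U = [[1.0], [-1.0]]
b = [0.0, 0.0]
batch = [[[1.0], [0.0], [1.0]], [[0.0], [0.0], [0.0]]]
final_states = run_rnn(W, U, b, batch)
```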

This not only enhances user experiences but also opens doors to improved customer service and global communication on an unprecedented scale.

Choosing the Right GPU for Machine Learning

Factors to Consider When Selecting a GPU for Machine Learning

Selecting the right Graphics Processing Unit (GPU) for machine learning is a pivotal decision that can significantly impact the efficiency and effectiveness of your deep learning projects. Several critical factors should guide this choice.

First and foremost is the GPU’s compute power, which is commonly measured in floating-point operations per second (FLOPS); on NVIDIA GPUs, the number of CUDA cores is a rougher indicator of the same thing.

Higher FLOPS generally means the GPU can perform the complex mathematical calculations crucial for training deep learning models faster. Memory capacity, both onboard GPU memory and system RAM, is another essential consideration, as it limits the size of the models, batches, and datasets you can work with comfortably.
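FLOPS figures become more meaningful once you relate them to the work a model actually does. Multiplying an m×k matrix by a k×n matrix takes about m·n·k multiply-add pairs, or roughly 2·m·n·k floating-point operations. The back-of-the-envelope sketch below turns that count into a rough time estimate; the 20 TFLOPS peak and 50% utilization are hypothetical numbers chosen for illustration, not the specification of any particular GPU, and real throughput also depends on memory bandwidth and numeric precision.

```python
def matmul_flops(m, k, n):
    """Approximate FLOPs for an (m x k) @ (k x n) product: one multiply and one add per term."""
    return 2 * m * k * n


def estimated_seconds(flops, peak_flops, utilization=0.5):
    """Rough runtime, assuming the GPU sustains a fraction `utilization` of its peak rate."""
    return flops / (peak_flops * utilization)


# Hypothetical example: a 4096 x 4096 by 4096 x 4096 product on a GPU
# with an assumed peak of 20 TFLOPS and an assumed 50% utilization.
flops = matmul_flops(4096, 4096, 4096)
seconds = estimated_seconds(flops, peak_flops=20e12, utilization=0.5)
print(f"{flops / 1e9:.1f} GFLOP, about {seconds * 1e3:.1f} ms")  # 137.4 GFLOP, about 13.7 ms
```

Comparing such estimates across candidate GPUs, using their published peak figures for the precision you plan to train in, gives a first-order sense of relative speed.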

Comparison of Different GPUs Available in the Market

The market offers a wide range of GPUs suitable for machine learning tasks, each with its unique strengths and specifications. NVIDIA’s GeForce and Quadro series are popular choices, with the latter tailored more towards professional applications and the former being a cost-effective option for individuals.

The Tesla series, designed explicitly for data centers, excels in terms of performance and energy efficiency. Additionally, AMD GPUs, such as the Radeon and Instinct series, are gaining traction for machine learning applications due to their competitive pricing and high compute power.

It’s essential to weigh the specific requirements of your project against the features and pricing of these various options to make an informed decision.

Recommended GPUs for Different Machine Learning Requirements

The choice of GPU should align with the unique demands of your machine learning tasks. For entry-level and educational purposes, NVIDIA’s GeForce GPUs, such as the GTX and RTX series, offer excellent value for money. For research and small-scale projects, the NVIDIA Quadro series is a reliable choice, boasting robust performance and compatibility.

In data center environments, the NVIDIA Tesla series shines with its exceptional compute performance and energy efficiency, making it suitable for deep learning at scale. AMD’s Radeon and Instinct series cater to users looking for alternatives, especially in terms of cost-efficiency and competitive compute power.

Ultimately, selecting the right GPU for machine learning hinges on a careful assessment of your specific project requirements, budget, and long-term scalability goals.

Challenges and Limitations of GPUs in Machine Learning

Discussion on the Limitations of GPUs, Such as Memory Constraints

While Graphics Processing Units (GPUs) have proven to be a game-changer in the realm of machine learning, they are not without their limitations. One of the most prominent challenges is the memory constraint.

Deep learning models continue to grow in size and complexity, pushing the limits of available GPU memory capacity and memory bandwidth.

Training large models or processing massive datasets often requires significant memory resources, which can be a bottleneck for GPU-based systems. While some GPUs offer substantial onboard memory, it may not always suffice for extremely large models or datasets.

This constraint can force data scientists to compromise on model size or batch size, potentially affecting the quality and efficiency of machine learning tasks.
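A rough memory budget shows why model size and batch size compete for the same GPU memory. The sketch below assumes FP32 training with the Adam optimizer, where each parameter needs about 16 bytes (4 for the weight, 4 for its gradient, and 8 for Adam's two moment estimates), and treats per-sample activation memory as a single hypothetical number. Real usage also includes framework overhead, temporary buffers, and fragmentation, so the result is an optimistic upper bound on batch size, not a guarantee.

```python
def max_batch_size(num_params, gpu_memory_bytes, activation_bytes_per_sample,
                   bytes_per_param=16):
    """Largest batch that fits after weights, gradients, and optimizer state.

    bytes_per_param=16 assumes FP32 weights (4) + gradients (4) + Adam moments (8).
    """
    static = num_params * bytes_per_param
    remaining = gpu_memory_bytes - static
    if remaining <= 0:
        return 0  # the model state alone does not fit
    return remaining // activation_bytes_per_sample


GiB = 1024 ** 3
# Hypothetical: a 350M-parameter model on a 24 GiB card,
# assuming about 100 MiB of activations per training sample.
print(max_batch_size(350_000_000, 24 * GiB, 100 * 1024 ** 2))  # 192
```

Doubling the parameter count in this sketch shrinks the remaining budget, and with it the batch size, which is the trade-off described above.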

Current Developments and Future Trends in GPU Technology for Machine Learning

In response to the limitations of GPUs in machine learning, there have been notable developments and ongoing trends in GPU technology. Manufacturers like NVIDIA and AMD continue to release GPUs with larger memory capacities and higher memory bandwidth to ease these constraints.

High-end deep learning GPUs, like the NVIDIA A100 and the AMD Instinct MI100, offer substantial onboard memory and enhanced data processing capabilities. Moreover, NVIDIA and AMD GPUs are evolving to be more versatile, integrating dedicated units for AI workloads, such as NVIDIA’s Tensor Cores and AMD’s Matrix Cores, that perform matrix operations more efficiently.
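Tensor Cores speed up matrix math largely by operating on lower-precision inputs such as FP16, and they can accumulate the results in FP32. The sketch below illustrates why a wider accumulator matters. It uses Python's `struct` half-precision format (`"e"`) to round values to FP16, and a Python float (which is actually 64-bit) stands in for the wider accumulator, so it illustrates the numerics only and does not model the hardware. When a running total is kept in FP16, small increments are lost once they fall below half the spacing between representable FP16 values near the total.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def sum_fp16_accumulator(values):
    """Accumulate in FP16: the running total is rounded after every addition."""
    total = 0.0
    for v in values:
        total = to_fp16(total + to_fp16(v))
    return total


def sum_wide_accumulator(values):
    """FP16 inputs, but the running total is kept at higher precision."""
    total = 0.0
    for v in values:
        total += to_fp16(v)
    return total


values = [1.0] + [1e-4] * 10_000
print(sum_fp16_accumulator(values))  # stays at 1.0: each 1e-4 is lost to rounding
print(sum_wide_accumulator(values))  # close to 2.0
```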

Conclusion

The transformative role of Graphics Processing Units (GPUs) in machine learning and deep learning cannot be overstated. These powerful processors have elevated the fields to new heights, enabling the development of intricate models and applications that were once considered unattainable.

The importance of GPUs lies not only in their present capabilities but also in their promising future. As memory constraints are being addressed, and new technologies like AI accelerators and quantum computing emerge, GPUs are poised to evolve into even more efficient and versatile tools for machine learning practitioners.

Their integration with machine learning and AI technologies is expected to continue, offering boundless opportunities for innovation and problem-solving.